How to Monetize Your n8n Template: From Prompt to Report

How I built an AI- and n8n-powered workflow, DeepMed AI, that turns simple queries into structured biomedical reports.


If you’ve been following my Medium journey, you’ll know I’ve been obsessed with finding ways to apply AI, especially LLMs, to my own field of biomedical research.

After reflecting deeply, it hit me: as biomedical scientists, we spend a massive amount of time searching for literature and writing reports — grant applications, internal summaries, research papers… you name it.

That’s exactly where LLMs shine.

So when I discovered n8n and Jim_Le’s “Deep Research” template, I was genuinely excited. (Deep research was first released as an OpenAI feature that costs $200/month.) Finally, there was a base I could build on to tailor AI workflows to our unique biomedical needs!

Of course, I didn’t stop at the general template. I spent time refining and customizing it:

- Focused Prompting for Biomedical Research

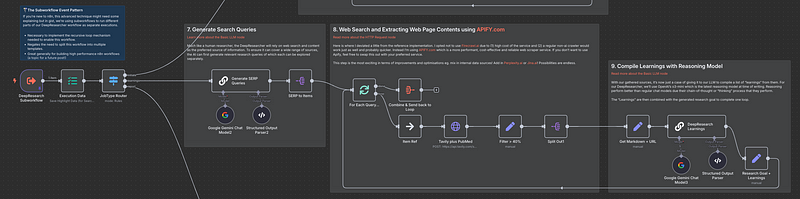

I rewrote the prompts to focus specifically on biomedical topics. I created a custom report structure so the LLM (currently mainly Gemini 2.5 Pro) knows exactly how to format its outputs, cleanly and logically. I designed sections like Introduction, Methods, Results, and Conclusion to mimic academic papers, which makes the final report feel much more familiar to researchers.

- Targeted Domain Search (PubMed First!)

To increase accuracy, I restricted the web search to PubMed only. The plan is to expand later into other specialized biomedical sources such as bioRxiv, or even clinical trial registries, for a broader scope.
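For readers who want to see what a PubMed-only search looks like outside n8n, here is a minimal sketch that builds a query URL for NCBI's public E-utilities `esearch` endpoint. It assumes that API rather than reproducing the workflow's actual node configuration, which isn't shown in this post; the helper name `build_pubmed_search_url` and the default of 20 results are illustrative choices.

```python
from urllib.parse import urlencode

# Public NCBI E-utilities search endpoint (assumption: the workflow's own HTTP setup may differ)
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_pubmed_search_url(query: str, max_results: int = 20) -> str:
    """Build an esearch URL restricted to the PubMed database.

    build_pubmed_search_url is a hypothetical helper; max_results=20 mirrors the
    "over 20 publications" per search mentioned in this post.
    """
    params = {
        "db": "pubmed",         # search PubMed only
        "term": query,          # the research question or sub-query
        "retmax": max_results,  # maximum number of PubMed IDs to return
        "retmode": "json",      # JSON response instead of the default XML
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_pubmed_search_url("CRISPR off-target effects"))
```

The JSON response lists matching PubMed IDs under `esearchresult.idlist`; a follow-up `efetch` call with those IDs would retrieve the abstracts for the LLM to read.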

By narrowing the search scope and giving the AI a clear structure, the quality of outputs improved significantly — almost to the point where you feel like you’re reading a junior researcher’s first draft!
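One way to picture the “clear structure” is as a fixed outline injected into the prompt. The sketch below is a hypothetical illustration, not the actual DeepMed AI prompt: `REPORT_SECTIONS` and `build_report_prompt` are invented names, and the instruction wording is an assumption.

```python
# Hypothetical outline mirroring the academic-paper sections described above
REPORT_SECTIONS = ["Introduction", "Methods", "Results", "Conclusion"]


def build_report_prompt(topic: str, findings: list[str]) -> str:
    """Assemble a prompt that asks the LLM to follow a fixed report outline."""
    # One markdown heading per section, in the order the report should use
    outline = "\n".join(f"## {section}" for section in REPORT_SECTIONS)
    # The retrieved PubMed findings, one bullet per item
    evidence = "\n".join(f"- {item}" for item in findings)
    return (
        "You are a biomedical research assistant.\n"
        f"Write a structured report on: {topic}\n"
        "Use exactly these section headings, in this order:\n"
        f"{outline}\n\n"
        "Base the report only on the findings below:\n"
        f"{evidence}\n"
    )
```

Keeping the outline in a single list makes it easy to reorder sections or add one (say, a Discussion) without rewriting the rest of the prompt.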

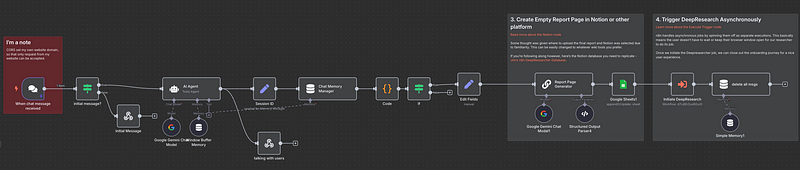

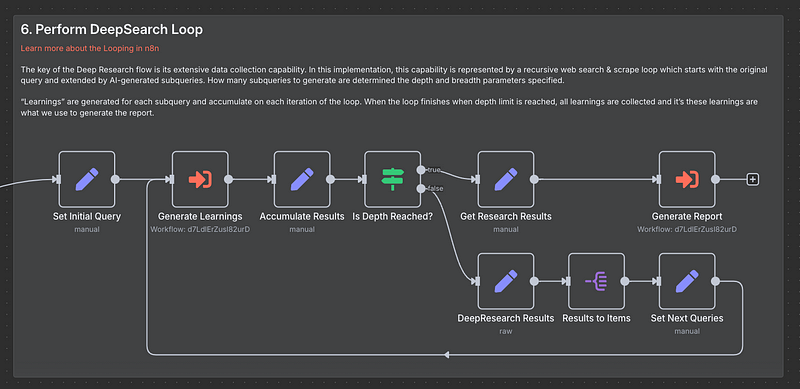

The 4-Step Workflow Logic

Let me break down how the whole flow works:

- User Interaction & Clarification

The user interacts with the chatbot to refine their research question. Through follow-up questions, the workflow helps users think more deeply about their topic — often surfacing angles they hadn’t considered.

- Research Trigger

Based on the conversation between the AI and the researcher, the system determines the depth of search needed and starts the research. For example, if the user wants a broad overview, it limits the number of queries; if they want deep, niche research, it goes full power into extensive searching.

- Web Search and Data Processing

The bot searches PubMed and extracts relevant content. Each search typically finds over 20 publications, which are then filtered by relevance to the topic. Since Step 2 usually produces at least three follow-up questions, each searched separately, the system screens more than 60 publications at this stage.

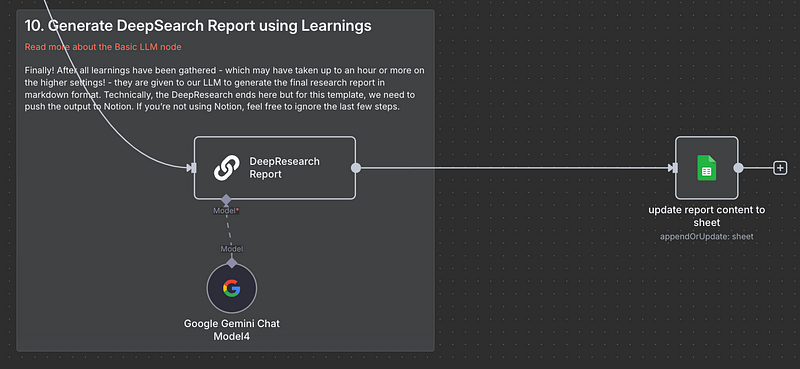

- Report Generation

Finally, the workflow compiles the findings into a structured report and saves it directly to Google Sheets for easy review and future reference. I also set up automatic formatting in Sheets so users can download their report as a PDF.
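Steps 2 and 3 above can be summarized in a few lines of Python. This is a simplified sketch under stated assumptions, not the n8n template's logic: the depth-to-query mapping in `QUERIES_PER_DEPTH` is hypothetical, and the keyword-overlap score in `filter_by_relevance` is a stand-in for whatever relevance check the workflow really performs. The 20-results-per-query figure and the "three follow-up questions" come from the description above.

```python
from dataclasses import dataclass

# Hypothetical mapping from requested depth to number of follow-up queries
QUERIES_PER_DEPTH = {"broad": 3, "deep": 6}
RESULTS_PER_QUERY = 20  # "over 20 publications" per search, per the post


@dataclass
class Paper:
    pmid: str
    title: str
    abstract: str


def plan_query_count(depth: str) -> int:
    """Step 2: fewer queries for a broad overview, more for niche research."""
    return QUERIES_PER_DEPTH.get(depth, QUERIES_PER_DEPTH["broad"])


def estimated_papers_screened(depth: str) -> int:
    """Approximate publications screened: queries x results per query."""
    return plan_query_count(depth) * RESULTS_PER_QUERY


def relevance_score(paper: Paper, keywords: set[str]) -> float:
    """Hypothetical stand-in: fraction of keywords found in title or abstract."""
    text = f"{paper.title} {paper.abstract}".lower()
    hits = sum(1 for kw in keywords if kw.lower() in text)
    return hits / len(keywords) if keywords else 0.0


def filter_by_relevance(
    papers: list[Paper], keywords: set[str], threshold: float = 0.5
) -> list[Paper]:
    """Step 3: keep papers at or above the threshold, most relevant first."""
    scored = [(relevance_score(p, keywords), p) for p in papers]
    kept = [(score, p) for score, p in scored if score >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in kept]
```

With three follow-up questions and about 20 results each, `estimated_papers_screened("broad")` gives 60, which matches the "more than 60 publications" figure once the "over 20" per search is taken into account.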

Building Frontend and Backend (Yes, I Became a “Full-Stack Developer”)

To make it more user-friendly, I used Google AI Studio and ChatGPT to build both a front-end web page and a backend linked to Google Sheets.

Honestly, even I was surprised: in this AI era, it feels like anyone can become a full-stack developer!

The front-end is a simple but elegant web interface where users can input their research queries and get instant feedback. On the backend, Google Sheets acts as the database, keeping all the reports well-organized. Later, I plan to integrate more professional databases and maybe even cloud storage solutions.
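To make “Google Sheets as the database” a bit more concrete, the sketch below flattens a finished report into one spreadsheet row. It writes to an in-memory CSV purely as a local stand-in, since the real backend talks to Google Sheets; the column names in `REPORT_COLUMNS` and both helper functions are hypothetical.

```python
import csv
import io
from datetime import datetime, timezone

# Hypothetical column layout for the reports sheet
REPORT_COLUMNS = [
    "report_id", "created_at", "query",
    "introduction", "methods", "results", "conclusion",
]


def report_to_row(report_id: str, query: str, sections: dict[str, str]) -> list[str]:
    """Flatten one report into a single row; missing sections become empty cells."""
    created_at = datetime.now(timezone.utc).isoformat(timespec="seconds")
    body = [sections.get(name, "") for name in REPORT_COLUMNS[3:]]
    return [report_id, created_at, query, *body]


def write_rows(rows: list[list[str]]) -> str:
    """Serialize a header plus rows as CSV text (stand-in for appending to a sheet)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(REPORT_COLUMNS)
    writer.writerows(rows)
    return buffer.getvalue()
```

One row per report keeps every report addressable by its ID, which is also the shape a more professional database table would take later.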

I even launched my project, DeepMed AI, on Product Hunt!

If you’re curious about my full Product Hunt journey, check it out here.

What’s Next: Beyond a Fun Side Project

While DeepMed AI is a great side project, I know it’s just the beginning.

If I truly want to solve real-world problems in the pharma (e.g., protein structure prediction) or IVD (in vitro diagnostics, e.g., biomarker detection) industries, I need to go further, especially if I want my tool to compete with existing solutions (such as the tools introduced here) and meet professional standards for extracting and analyzing content from PDF files.

That’s why I’ve recently started studying Python and SQL. My goal? To grow from an automation builder into a data scientist who can tackle serious biomedical business challenges. Python will allow me to build more advanced data pipelines, and SQL will help manage larger datasets effectively — two critical skills if I want to move beyond simple workflows to enterprise-grade solutions.

If you’re curious about this next chapter, stay tuned — I’ll be sharing my learning journey here too!